Collecting quantitative feedback at scale.

Surveys are a quicker way to collect feedback from users and potential users, but the feedback is not nearly as in-depth. You can get responses in a matter of minutes or hours, instead of spending an hour per user on interviews plus the time needed to gather insights.

Surveys are often used to follow up on research from user interviews. A follow-up survey can help confirm the response patterns you observed in those interviews.

Another reason to conduct surveys is to collect recurring feedback over time. For instance, if you set up an automated survey that is emailed after a user takes an action, such as finishing onboarding, making their first purchase, or reaching their one-year anniversary as a customer, you'd collect a lot of data over time.

Lastly, if you need data urgently, a survey sent in the morning can have hundreds of responses by the afternoon, which may be enough to formulate your ideas and next steps.

Interested in what it takes to conduct a user survey? Below are the high-level steps for your team to go through, along with additional resources and videos to review for deep dives.

Start with an internal discussion to define the questions your team wants answered. These questions could be business related or user-challenge related.

Once you know the questions you want to answer, you then need to talk about the various audience and customer segments you would like to include in your sample.

Think through who would be the best people to send this survey to in order to get responses that are representative of the wider group or audience.

The segmentation characteristics will help determine where the survey is promoted and what questions to include in the screener.

With your internal questions in hand, you can then start developing questions to ask participants in order to uncover the deep-seated answers hidden within your audience.

Generally speaking, it's important to keep your survey short and to the point. Only ask essential questions and avoid "nice to have" questions. Long surveys will not only cause drop-offs, but can also skew results due to respondent fatigue.

Developing questions that poke at the answers you're trying to uncover without biasing the response is both an art and a science.

Here are some resources on developing your survey questions.

Book: Improving Survey Questions

PDF: Survey Fundamentals (UW Madison)

Resource Page: Questionnaire Design

There are a number of different ways to collect survey responses. The two most common are a direct survey and an in-line page survey.

Direct Survey

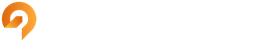

A direct survey is one created in a survey program and sent out to a large audience to complete. Here's an example of what you could create and send using a tool such as Survey Monkey or TypeForm.

One benefit of emailing out a direct survey is that you can create variations or entirely different surveys and send them to specific, targeted segments of your contacts.

Additionally, these surveys can include screener questions and can be longer than an on-page survey.

In-Line Page Survey

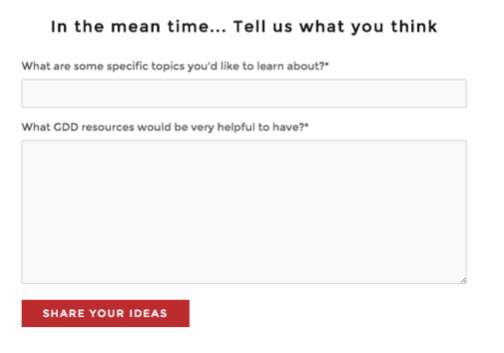

Another way to conduct a survey is with an on-page survey. Here's an example from the beta "Growth-Driven Design blueprint course".

Upon signup, questions would be asked on the thank you page. Having the questions embedded directly on the thank you page is an easy way to collect information in a user-friendly way.

This feedback helped inform what should be built into the site by asking users which specific topics they'd like to learn about and which resources would be helpful.

Below is a video that can help you determine different ways to source participants who fit your selected sample.

Once you have received enough responses to feel confident in the results (the number will vary), it's time to step back and review them.
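As a rough way to reason about "enough responses," the sketch below computes the approximate margin of error for a percentage result at a 95% confidence level. It assumes a simple random sample and uses the most conservative case (a 50/50 split); the function name `margin_of_error` and the example response counts are illustrative and not part of the original guide.

```python
import math


def margin_of_error(responses: int, proportion: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a surveyed proportion.

    Assumes a simple random sample. z = 1.96 corresponds to a 95%
    confidence level, and proportion = 0.5 is the most conservative case.
    """
    if responses <= 0:
        raise ValueError("responses must be positive")
    return z * math.sqrt(proportion * (1 - proportion) / responses)


# Illustrative response counts, not figures from the guide.
for n in (50, 100, 400, 1000):
    print(f"{n:>5} responses: +/- {margin_of_error(n) * 100:.1f} percentage points")
```

Under these assumptions, quadrupling the number of responses roughly halves the margin of error, which is one reason the "right" number depends on how precise your team needs the answer to be.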

Not only should you review the data as a whole, you should also filter and sort it by answers to specific questions and by screener information to see if there are new and interesting patterns your team didn't expect.
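One simple way to slice responses by segment is a cross-tabulation: count how each segment answered a given question. The sketch below is a minimal example using only the Python standard library; the field names (`role`, `top_challenge`) and the sample responses are hypothetical, and a real export from your survey tool will look different.

```python
from collections import Counter, defaultdict

# Hypothetical survey export: one dict per completed response.
responses = [
    {"role": "marketer", "top_challenge": "traffic"},
    {"role": "marketer", "top_challenge": "traffic"},
    {"role": "marketer", "top_challenge": "conversions"},
    {"role": "designer", "top_challenge": "conversions"},
    {"role": "designer", "top_challenge": "conversions"},
    {"role": "developer", "top_challenge": "traffic"},
]


def cross_tab(rows, segment_key, answer_key):
    """Count answers to one question, grouped by a segment field."""
    table = defaultdict(Counter)
    for row in rows:
        table[row[segment_key]][row[answer_key]] += 1
    return table


table = cross_tab(responses, "role", "top_challenge")

# Print each segment's answers, most common first, with its share of the segment.
for segment, counts in sorted(table.items()):
    total = sum(counts.values())
    for answer, count in counts.most_common():
        print(f"{segment:<10} {answer:<12} {count}/{total} ({count / total:.0%})")
```

Swapping `"role"` for a screener field, or `"top_challenge"` for another question, gives a different cut of the same data without changing the function.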

Then return to the original questions your team posed and see which patterns from the survey help answer them, and what additional research may still be needed.

It's common to include a question at the end of your survey, "Would you be interested in chatting with one of our team members about your experience? If so, please enter your email."

This will give you a great list of individuals to choose from for user interviews, so you can dive deeper into the responses you find interesting.

Want to learn more about conducting user interviews? Review our step-by-step guide for conducting user interviews.

You can also add a similar question about beta testing if you are trying to source a group of qualified, good-fit beta testers.

Free certification covering the Growth-Driven Design methodology for building and optimizing a peak-performing website. It lays out processes for UX research and includes many tools and templates.

It's common for surveys to have easy-to-fix mistakes like misleading questions, improper sampling, and skewed rating scales.

This blog post by Elizabeth Ferrall of Google Ventures outlines easy improvements and lists some deep-dive books to check out at the end.

One of the leaders in conducting online surveys, Survey Monkey, has compiled a helpful resource on "how to conduct a survey" and included a number of examples and templates for various types of surveys.